Evaluating the dependability of black-box AI methods

Artificial intelligence methods are increasingly finding their way into healthcare, medicine, agriculture and the food sector. Setting the hype aside, these methods are becoming ever more effective at the tasks they are built for. Food safety has not been untouched by these developments in big data and contemporary machine learning. Examples include AI as part of analytical measurement procedures, internet of things (IoT)-enabled analytical devices, and predictive analytics algorithms for crunching large data sets. But how does one validate such methodologies, especially when the stakes are high and human health and consumer safety are involved?

Novel machine learning algorithms are often described as ‘black boxes’, even though they demonstrate powerful performance. This opacity consistently raises concerns, even for high-fidelity algorithms. Data scientists typically set aside ‘test’ data on which the performance of the developed AI method is evaluated. Although such statistical procedures are considered good practice for evaluating model performance, they are not sufficient on their own to establish fitness-for-purpose or to meet regulatory requirements.
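Assuming a simple binary classifier, the hold-out evaluation described above might be sketched in Python as follows. The function names (`holdout_split`, `fit_threshold`, `wilson_interval`), the 80/20 split and the synthetic data are illustrative assumptions, not QuoData's procedure. The sketch only shows that a test set yields an accuracy estimate with its own statistical uncertainty; nothing in it checks whether the method is fit for a specific intended use.

```python
import math
import random


def holdout_split(samples, test_fraction=0.2, seed=42):
    """Shuffle a copy of the samples and set aside a fraction as the test set."""
    rng = random.Random(seed)
    shuffled = list(samples)
    rng.shuffle(shuffled)
    n_test = max(1, round(len(shuffled) * test_fraction))
    return shuffled[n_test:], shuffled[:n_test]  # (train, test)


def fit_threshold(train):
    """Hypothetical 'model': pick the cut-off on a 0.00..1.00 grid that best fits the training data."""
    grid = [i / 100 for i in range(101)]
    best = max(grid, key=lambda t: sum((x > t) == y for x, y in train))
    return lambda x: x > best


def wilson_interval(successes, n, z=1.96):
    """Wilson score interval for a binomial proportion (z=1.96 gives roughly 95 %)."""
    p = successes / n
    denom = 1 + z**2 / n
    centre = (p + z**2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z**2 / (4 * n**2)) / denom
    return centre - half, centre + half


# Synthetic, purely illustrative data: the label is "x > 0.5", with ~10 % of labels flipped as noise.
rng = random.Random(0)
data = []
for _ in range(500):
    x = rng.random()
    label = x > 0.5
    if rng.random() < 0.1:
        label = not label
    data.append((x, label))

train_set, test_set = holdout_split(data)
model = fit_threshold(train_set)  # fitted on training data only
correct = sum(model(x) == y for x, y in test_set)  # evaluated on held-out data only
low, high = wilson_interval(correct, len(test_set))
print(f"test accuracy {correct / len(test_set):.3f}, 95% CI [{low:.3f}, {high:.3f}]")
```

The Wilson interval is used here rather than the simpler normal approximation because it behaves better when the accuracy is close to 0 or 1.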

Unravelling black box AI

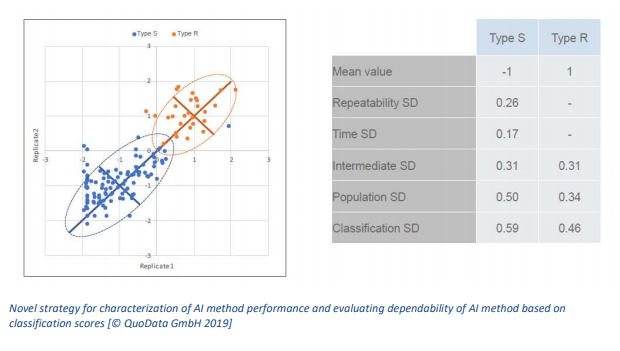

At QuoData, we recognized very early on the importance not only of unraveling this AI black box, but also of developing strategies to demonstrate the dependability and reliability of AI methods. With this aim in mind, we are expanding and refining new strategies with a sound mathematical basis to evaluate whether AI methods are dependable enough for their intended use. This way, government and regulatory agencies can evaluate new methods, producers can have greater confidence in their products and services, and consumers can worry less about health and safety.